SMPTE refined the work that the studios sponsored and summed up in a series of compliance documents (See: DCI Movies) done in the spirit of, “This is the minimum that we require if you want to play our movies.” As the saying goes, “Standards are great! That’s why there are so many of them.” And as an executive stated, “We can compete at the box office, but if we cooperate on standards, it benefits everyone.”

In fact, the cinema standard that is known as 2K is beyond good enough, especially now that the artists in the post-production chain have become more familiar with how to handle the technology at different stages. Most people in the world don’t get to see a first run print anyway, and a digital print (which doesn’t degrade) compares more than favorably with any film print after a few days. Film is plastic which is constantly brought to its melting point: it becomes an electrostatic dust trap, stretches and gets scratched, and its dyes desaturate.

To this date, most digital projectors are based upon a Texas Instruments (TI) chipset. Sony’s projector is based upon a different technology, and has always been 4K (4 times the pixel count of 2K), but not many movies have been shipped to that standard yet. The TI OEMs will be shipping 4K equipment by the end of the year (or early next year). Except in the largest of cinemas, most people won’t be able to tell the difference between 2K and 4K, but the standard was built wide enough to accommodate both.

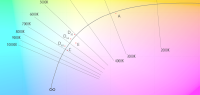

Confusing the consumer, 2K in pixels (2048 picture elements in each line) seems near enough to the 1920×1080 TV standard known as 1080p. But there are other differences in the specification besides pixel count, such as the color sample rate, that are more important. In addition, many steps of the broadcast chain degrade the potential signal quality, so hi-def broadcast is subject to the whims of how many channels are being simultaneously broadcast, and what is happening on those channels. (For example, if a movie is playing at the same time as 15 cooking channels, it will have no problem dynamically grabbing the extra bandwidth needed to show an explosion happening with a lot of motion. But if several movies all dynamically require more bandwidth simultaneously, the transmission equipment is going to have to bend some of them in preference to others, or diminish them all.) Blu-ray will solve some of that, depending on how much other material is put on the disc with the movie. Consumers like the “other stuff” plus multiple audio versions. Studios figure that only a relative handful of aficionados optimize their delivery chain enough to be able to tell the difference. So they end up balancing away from the finest possible quality for the home, while the finest quality is maintained for the cinema by virtue of the standards.
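To put numbers on the pixel counts mentioned above, here is a minimal sketch. The 2048×1080 and 4096×2160 frame sizes are the DCI container sizes and are an assumption here, since the text only gives the 2048-pixel line width and the 1920×1080 TV raster. The names `FORMATS`, `pixel_count` and `ratio` are made up for illustration.

```python
# Frame sizes in pixels (width, height).
# Assumption: the 2K and 4K entries are the DCI container sizes; the article
# itself only states the 2048-pixel line width and the 1920x1080 TV raster.
FORMATS = {
    "DCI 2K": (2048, 1080),
    "DCI 4K": (4096, 2160),
    "1080p": (1920, 1080),
}


def pixel_count(name):
    """Total pixels in one frame of the named format."""
    width, height = FORMATS[name]
    return width * height


def ratio(a, b):
    """How many times more pixels format a has than format b."""
    return pixel_count(a) / pixel_count(b)


for name in FORMATS:
    print(f"{name}: {pixel_count(name):,} pixels per frame")

print(f"4K vs 2K:    {ratio('DCI 4K', 'DCI 2K'):.2f}x")
print(f"2K vs 1080p: {ratio('DCI 2K', '1080p'):.3f}x")
```

On pixel count alone, 2K is only about 7% larger than 1080p, which is why the other parts of the specification matter more than the raster size.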

With all the 3D movie releases announced, people question whether they should expect 3D in the home. It is quite possible. The restrictions or compromises are many though. First, special glasses are required, and there seems to be a reaction against the glasses. Many companies are attempting to develop technologies that allow screens to do all the work (no glasses), but when the largest company, which spent the most money over the last few years, pulls out of the market, it isn’t a good sign. (Philips pulls out of 3D research | Broadband TV News) The reality is that one person can see the 3D image if they keep their head locked in one position, and perhaps another person in another exact position, but it isn’t a marketable item.

Fortunately, there were three companies at ShoWest which offered much cooler glasses for watching 3D, including clip-ons. Since there are 3 different types of 3D technology in the theaters, it is a complicated task for the consumer. At best, the cinema will hype that they have 3D, but they rarely give the detail of which type or equipment they are using.

There are several clues that humans use to establish depth data and locations of items from a natural scene. Technically, these items in the 3rd dimension are placed on what is called the ‘z axis’ (width and height being the x and y axes). Matt Cowan details a few of these clues in this presentation, and there are others. Filmmakers have understood how to use these in 2D presentations for ages.

But the challenge for decades has been synchronizing the projection and display of two slightly different images, taken by cameras 6.4cm apart (the same as the ‘average’ eye distance), in a manner that shuts out the picture of the right eye from the left eye, and a moment later shuts out the picture of the left eye from the right eye, fast enough that the eyes get info to the brain in such a way that the mind says, “Ah! Depth.” Digital projectors make this attempt easier. The technology has evolved even in the last 2 years, and that evolution will continue.

There are four companies (Dolby, RealD, MasterImage and XpanD) that produce 3 different technologies for digital 3D systems for the cinema. Each coordinates with the projector in a slightly different manner. The projector assists by speeding up the rate at which images are flashed to the eyes, to three times the 2D rate in fact, with a technique called “triple flashing”.

For comparison, 2D film projector technology presents the image two times every 1/24th of a second. This means that the film is pulled in front of the lens every 24th of a second, allowed to settle, then a clever gate opens to project light through the film to the screen, which then closes and opens and closes again. Then the film is unlocked and pulled to the next frame. With digital 2D, motion pictures are handled the same, presenting the same picture to the screen twice per 24th of a second, then the next picture and so on. Triple flashing a 3D movie increases the rate from 48 exposures per second to 72 per second…for each eye! Every 1/24th of a second the left eye gets 3 exposures of its image, and the right eye gets 3 exposures of its slightly different image; L, R, L, R, L, R, then change the image.
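The flash-rate arithmetic in this paragraph can be checked with a short sketch. The 24 frames per second and the flash counts come from the text; the function names `flashes_per_second` and `flash_sequence` are hypothetical and exist only for this illustration.

```python
FRAME_RATE = 24  # source pictures per second, as described in the text


def flashes_per_second(flashes_per_frame, eyes=1):
    """Exposures per second for the given flashes per picture and eye count."""
    return FRAME_RATE * flashes_per_frame * eyes


def flash_sequence(flashes_per_frame):
    """Left/right order shown during one 1/24 s picture period."""
    return ["L", "R"] * flashes_per_frame


double_flash_2d = flashes_per_second(2)             # 2D: same picture twice
triple_flash_per_eye = flashes_per_second(3)        # 3D: per eye
triple_flash_total = flashes_per_second(3, eyes=2)  # 3D: both eyes combined

print("2D double flash:", double_flash_2d, "exposures/s")
print("3D triple flash:", triple_flash_per_eye, "exposures/s per eye")
print("3D triple flash:", triple_flash_total, "exposures/s on screen")
print("One picture period:", ", ".join(flash_sequence(3)))
```

The combined 3D figure is three times the 2D double-flash rate, because the projector has to alternate between two image streams while still flashing each one three times per picture.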

Since it would be difficult to get everyone to blink one eye and then the other in the right sequence for an hour or two, the different 3D systems filter out the picture of one eye and then the other. The Dolby system does this (simply stated) by making one lens of the glasses an elaborate color filter for one eye, with the complementary twin for the other eye. The projector has a spinning color wheel with matching color filters which, in effect, presents one image that one eye can’t see (but the other can), then presents the opposite. RealD does this with a circular polarizing filter in front of the projector lens that switches clockwise then counter-clockwise, and glasses which have a pair of clockwise/counter-clockwise lenses. The XpanD system does this with an infra-red system that shutters the opposing lenses at the appropriate time. There is a 4th system named MasterImage which uses the same polarizing glasses as RealD, but with a spinning filter wheel instead of a very clever and elaborate (read, “expensive”) LCD technology.

Suffice it to say that there are advantages and disadvantages to each system. Dolby’s glasses are made from a sphere of glass so that the eye’s cornea is always equidistant from the glass filter. They are also more expensive, though they have had two price drops as quantities have gone up, from an original $50 a pair, to last year’s $25, and now $17 each. They need to be washed between uses for sanitary reasons, which provides jobs of course, but also adds to logistics and cost. XpanD glasses also need washing between uses and have a battery that needs changing at some point. (Without going into the detail, the XpanD IR glasses are thus far the technology of choice for the home market, though no company should be counted out at this stage.)

RealD were the first to market and originally marketed with the studios, who provided single use glasses for each movie. Dolby sold against this by taking the ecology banner, announcing that they had developed their glasses with a coating that can be washed at least 500 times. RealD found that their glasses could be recycled to some minor extent and have now put green recycling boxes into the lobbies of the theater for patrons to drop them into for return to the factory, washing, QC and repackaging (of course, in more plastic.) There are no statistics as to how many get returned and how many get re-packaged.

A few cinemas are selling the glasses for a dollar or a euro, and seeing a lot of people take care of, and return with, their glasses. Eventually this model will be more widespread, with custom and prescription glasses, but the movie industry was concerned about putting up a barrier while 3D was in its infancy, and glasses makers weren’t interested when the numbers were low.

Since the three systems are different, and there is no way to make a universal pair of glasses, patrons are going to have to know what type of system is used at their cinema of choice, or buy multiple pairs. In any case, the glasses are not going to be ultra-slim and sexy. In addition to being the filter for the projected light, they must also filter extraneous light. If they allow too much light from Exit signs or aisle lighting or your iPhone, the brain-trickery technology will not work. There are already too many problems with 3D in general, and today’s version of it in particular, to allow any extra variables.

The most grievous is the amount of light getting filtered by all the lenses, coupled with the fact that half the light is being filtered from both eyes by making you blink 72 times per second. With some systems, less than 20% of the original light reaches the eye. Up till now there hasn’t been a way to crank up the light level to compensate, and if projectionists tried, the cost in electricity would go up and the life of the system would go down. This is one major reason that manufacturers of new projectors are hyping lower light levels.
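As a rough sketch of how these losses compound: each stage passes only a fraction of the light, and the fractions multiply. The only figures taken from the text are the half-time exposure per eye and the “less than 20%” result. The two filter transmission values below are invented for illustration and are not measurements of any real system; `light_reaching_eye` is a hypothetical helper.

```python
def light_reaching_eye(duty_cycle, *transmissions):
    """Fraction of projector light that reaches one eye.

    duty_cycle: fraction of time the eye is shown an image.
    transmissions: fraction of light passed by each filter in the path.
    """
    fraction = duty_cycle
    for t in transmissions:
        fraction *= t
    return fraction


# From the text: each eye is blanked half the time.
DUTY_CYCLE = 0.5

# Hypothetical values, chosen only to illustrate the multiplication:
PROJECTOR_FILTER = 0.5  # assumed fraction passed by the filter at the projector
GLASSES_FILTER = 0.7    # assumed fraction passed by the glasses

example = light_reaching_eye(DUTY_CYCLE, PROJECTOR_FILTER, GLASSES_FILTER)
print(f"Light reaching each eye: {example:.1%}")
```

With these assumed numbers the result lands under 20%, which shows how quickly a few moderate losses in series add up.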

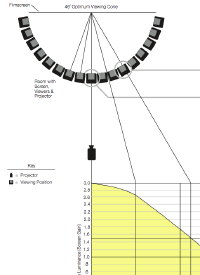

The other technical compromise with the polarizing lens systems is that they require what is called a “silver” screen to help maintain the polarization (and secondarily, to help maintain light levels). But there is no free lunch with physics. Silver screens can be optimized, but the worst of them will have ‘hot spots’ in the room, so that the side seats or upper seats see a different (darker) image, some seats see a brighter one, and, hopefully, some get the ‘correct’ amount of light. The major screen manufacturers have done a lot of work to mitigate this effect, and will tell you this problem is now virtually solved, but there are a lot of older screens out there, and incorrectly installed screens, and a lot of people who have walked around and still see the effect. Sit in the center of the cinema and you will have the best odds, somewhat toward the front (the projector is higher than you are, and presuming that the screen is flat, the theoretical correct angle to your eyes is down). On the other hand, audio mixers mix from about three quarters back. YMMV.

Parts 3 and 4 deal with acquisition, with and without 3D, more considerations of digital and 3D’s evolution, how to make your own master, where in the world these digital boxes are, and whether there will be 50% saturation by the end of 2011.

Cross posted to: DCinemaTools

3D Luminance Issues—Photopic, barely. Mesopic, often. Scotopic? Who knows…?
