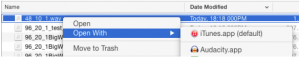

Movies at the cinema, a cultural phenomenon that blends technology and groups of people, are taking one more step toward inclusiveness. The imperfect solutions for the Deaf and Hard of Hearing and for the Blind and Partially Sighted are increasingly part of every cinema facility – either special glasses that present words in mid-air or equipment that places words at the end of a bendable post, and earphones that transmit either a specially enhanced (mono) dialog track or a different mono track that includes a narrator who describes the action. [At right: One of two brands of Closed Caption reading devices.]

Work is now nearing completion on a required new set of technologies that will help bring a new group of people into the rich cultural experience of movie-going. The tools being added are for those who use sign language to communicate. As has been common on the path to inclusion, compliance with government requirements is the driving force. This time the requirement comes from Brazil, via a “Normative Instruction” stating that by 2018 (the schedule has since been delayed) every commercial movie theater in Brazil must be equipped with assistive technology that guarantees the services of subtitling, descriptive subtitling, audio description and Libras.

Libras (Língua Brasileira de Sinais) is the acronym for Brazilian Sign Language, the sign language of Brazil’s deaf community. Libras is an official language of Brazil, used by a segment of the population estimated at 5%. The various technology tools to fulfil the sign language requirements are part of the evolving accessibility landscape. In this case, as has often happened, an entrepreneur who devised a cell phone app was first to market, but by the time the rules were formalized, cell phones belonging to the individual were not allowed to be part of the solution.

The option of using cell phones seems like a logical choice at first glance, but there are several problems with their use in a dark cinema theatre. They have never been found acceptable for other in-theatre uses, and this use case is no exception. The light that they emit is not designed to be restricted to just that one audience member (the closed caption device above does restrict the viewing angle and stray light), so it isn’t just a bother for the people in the immediate vicinity – phone light actually decreases perceived screen contrast for anyone who catches it in their field of vision. Cell phones also don’t handle the script securely, which is a requirement of the studios, who are obligated to protect the copyrights of the artists whose work they distribute. And, of course, phones have a camera pointing at the screen – a huge problem for piracy concerns.

The fact is, there are problems with each of the various accessibility equipment offerings.

Accessibility equipment users generally don’t give 5 Stars for the choices they’ve been given, for many and varied reasons. Some of the technology – such as the device above which fits into the seat cup holder – requires the user to constantly re-focus, back-and-forth from the distant screen to the close foreground words illuminated in the special box mounted on a bendable stem. Another choice – somewhat better – is a pair of specialized glasses that present the words seemingly in mid-air with a choice of distance. While these are easier on the eyes if one holds their head in a single position, the words move around as one moves their head. Laughter causes the words to bounce. Words go sideways and in front of the action if you place your head on your neighbor’s shoulder.

[This brief review is part of a litany of credible issues, best reviewed in another article. It isn’t a one-sided issue either – exhibitors point out that the equipment is expensive to buy and that losses are often disproportionate to its use, and manufacturers point out that the amount of income derived doesn’t support continuous development of new ideas.]

These (and other) technology solutions are often considered to be attempts to avoid the simplest alternative – putting the words on the screen in what is called “Open Caption”. OC is the absolute favorite of the accessibility audience. It is secure, pristine, on the same focal plane, and importantly, all audience members are treated the same – no need to stand in line and then drag around special equipment while your peers are chatting somewhere else. But since words on screen haven’t been widely used since shortly after ‘talkies’ became common, the general audience isn’t used to them and many fear they would vehemently object. Attempts to schedule special open caption screening times haven’t worked in the past for various reasons.

And while open caption might be the first choice for many, it isn’t necessarily the best choice for a child, for example. Imagine the child who has been trained in sign language longer than s/he has been learning to read, who certainly can’t read as fast as those words speeding by in the new Incredibles movie. But signing? …probably better.

Sign language has been used for years on stage, or alongside public servants during announcements, or on the TV or computer screen. Bringing it into the cinema is the next logical step. And it comes just in time, as the studios and manufacturers’ technology teams can take on the project now that many new enabling components are available, tested, and ready to be integrated into new solutions.

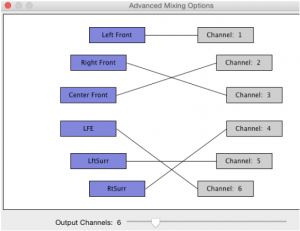

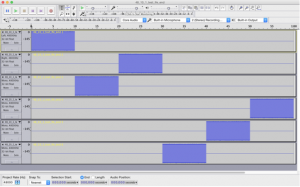

These include recently designed and documented synchronization tools that have gone through the SMPTE and ISO processes, and which work well with the newly refined SMPTE-compliant DCP (now shipping nearly worldwide, which is yet another story to be written). These help make the security and packaging concerns of a new datastream more easily addressable within the existing standardized workflows. The question started as ‘how to get a new video stream into the package?’ After much discussion, the choice was made to include that stream as a portion of the audio stream.

There is history in using some of the 8 AES pairs for non-audio purposes (motion seat data, for example). And there are several good reasons for using an available, heretofore unused channel of a partly filled audio pair. Although the enforcement date has been moved back by the Brazilian Normalization group, the technology has progressed such that the main facilitator of movies for the studios, Deluxe, has announced its capability of handling this solution. The ISDCF has a Technical Document in development and under consideration which should help others and smooth the introduction worldwide, if that should happen. [See: ISDCF Document 13 – Sign Language Video Encoding for Digital Cinema (a document under development) on the ISDCF Technical Documents web page.]
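To get a feel for why a single spare audio channel can plausibly carry a small sign language video, here is a rough back-of-the-envelope sketch. The figures used (48 kHz sampling, 24 bits per sample, 24 frames per second) are assumptions chosen for illustration and are not taken from this article; the actual encoding and limits are whatever the ISDCF document specifies. The function names are hypothetical.

```python
# Rough capacity estimate for one uncompressed PCM audio channel.
# ASSUMPTIONS (not from the article): 48 kHz sampling, 24-bit samples, 24 fps.

def channel_bitrate_bps(sample_rate_hz: int = 48_000, bits_per_sample: int = 24) -> int:
    """Raw bits per second that one PCM channel can carry."""
    return sample_rate_hz * bits_per_sample


def bytes_per_picture_frame(bitrate_bps: int, frames_per_second: int = 24) -> int:
    """Payload bytes available in the channel for each projected picture frame."""
    return (bitrate_bps // frames_per_second) // 8


if __name__ == "__main__":
    rate = channel_bitrate_bps()
    print(f"Raw channel capacity: {rate / 1_000_000:.3f} Mbit/s")
    print(f"Bytes available per picture frame: {bytes_per_picture_frame(rate)}")
```

Under these assumptions the channel offers a little over 1 Mbit/s, which is in the range commonly used for small, compressed video windows. That is only a plausibility check, not a statement about the bitrate any product actually uses.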

One major question remains. Where is the picture derived from? The choices are:

- to have a person do the signing, or

- to use the cute emoticon-style of a computer-derived avatar.

Choice one requires a person to record the signs as part of the post-production process, just as sub-titling or dubbing is done in a language that is different from the original. Of course, translating the final script of the edited movie can only be done at the very last stage of post, and like dubbing requires an actor with a particular set of skills who has to do the work, which then still has to be edited to perfection and approved and QC’d – all before the movie is released.

An avatar still requires that translation. But the tool picks words from the translation, matches them to a dictionary of sign avatars, and presents them on the screen that is placed in front of the user. If there is no avatar for that word or concept, then the word is spelled out, which is the common practice in live situations.
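The lookup-with-fallback behavior described above can be sketched in a few lines. This is a minimal illustration only, not how any vendor’s product works: the dictionary contents, the clip identifiers, and the function names are all hypothetical, and a real system would deal with grammar, timing, and facial expression rather than a simple word-by-word match.

```python
from typing import Dict, List

# HYPOTHETICAL sign dictionary: translated word/concept -> avatar clip ID.
# The entries and clip names are placeholders invented for this sketch.
SIGN_CLIPS: Dict[str, str] = {
    "THANK-YOU": "clip_thank_you",
    "MOVIE": "clip_movie",
}


def fingerspell(word: str) -> List[str]:
    """Fallback: one hand-shape clip per letter when no sign is available."""
    return [f"letter_{ch.upper()}" for ch in word if ch.isalpha()]


def words_to_clips(words: List[str]) -> List[str]:
    """Map each translated word to an avatar clip, spelling out unknown words."""
    clips: List[str] = []
    for word in words:
        key = word.upper()
        if key in SIGN_CLIPS:
            clips.append(SIGN_CLIPS[key])
        else:
            clips.extend(fingerspell(word))
    return clips


if __name__ == "__main__":
    print(words_to_clips(["movie", "Kim"]))
```

In this sketch “movie” resolves to a single avatar clip, while the name “Kim”, which has no dictionary entry, is spelled out letter by letter.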

There has been a lot of debate within the community about whether avatars can transmit the required nuance. After presentations from stakeholders, the adjudicating party in Brazil reached the consensus that avatars are OK to use, though videos of actors doing the signing are preferred.

The degree of nuance in signing is very well explained by the artist Christine Sun Kim in the following TED talk. She uses interesting allegories with music and other art to get her points across. In addition to explaining, she also shows how associated but slightly different ideas get conveyed using the entire body of the signer.

Embed Code from Ted Talk

<div style="max-width:854px"><div style="position:relative;height:0;padding-bottom:56.25%"><iframe src="https://embed.ted.com/talks/lang/en/christine_sun_kim_the_enchanting_music_of_sign_language" width="854" height="480" style="position:absolute;left:0;top:0;width:100%;height:100%" frameborder="0" scrolling="no" allowfullscreen></iframe></div></div>

Link from Ted Talk

Nuance is difficult enough to transmit well in written language. Most of us don’t have experience with avatars, except perhaps if we consider our interchanges with Siri and Alexa – there we notice that avatar-style tools transmit only a limited set of tone/emphasis/inflection nuance, if any at all. Avatar-based signing is a new art that needs to express a lot of detail.

The realities of post production budgets and movie release times and other delivery issues get involved in this issue and the choices available. The situation with the most obstacles is getting all the final ingredients prepared for a day and date deadline. Fortunately, some of these packages can be sent after the main package and joined at the cinema, but either way the potential points of failure increase.

In addition to issues of time, issues of budget come into play. Budgets for documentaries or small movies, often made with a country’s film commission funds, are often quite limited. Independents with small budgets may run out of credit cards without being able to pay for the talent required to have human signing. Avatars may be the only reasonable choice versus nothing.

At CinemaCon we saw the first of the two technologies presented by two different companies.

Riole® is a Brazilian company which developed a device that passes video from the DCP to a specialized color display that plays the video of the signing actor, as well as simultaneously presenting printed words. It uses SMPTE standard sync and security protocols and an IR emitter. Their cinema line also includes an audio description receiver/headphone system.

Dolby Labs also showed a system that is ready for production, which uses the avatar method. What we see in the picture at the right is a specially designed/inhibited ‘phone’ that the cinema chain can purchase locally. A media player gets input from the closed caption feed from the DCP, then matches that to a library of avatars. The signal is then broadcast via Wi-Fi to the ‘phones’. Dolby has refreshed their line of assistive technology equipment, and this will fit into that group’s offerings.

Both companies state that they are working on future products/enhancements that will include the other technology: Riole is working on avatars, Dolby on videos.

There has been conjecture in the past as to whether other countries might follow with similar signing requirements. At this point that remains conjecture. Nothing but rumors has been noted.

There are approximately 300 different sign languages in use around the world, including International Sign which is used at international gatherings. There are a lot of kids who can’t read subtitles, open or closed. Would they (and we) be better off seeing movies with their friends or waiting until the streaming release at home?

This is a link to a Statement from the WFD and WASLI on Use of Signing Avatars

Link for “Thank you very much” in ASL thank2.mp4

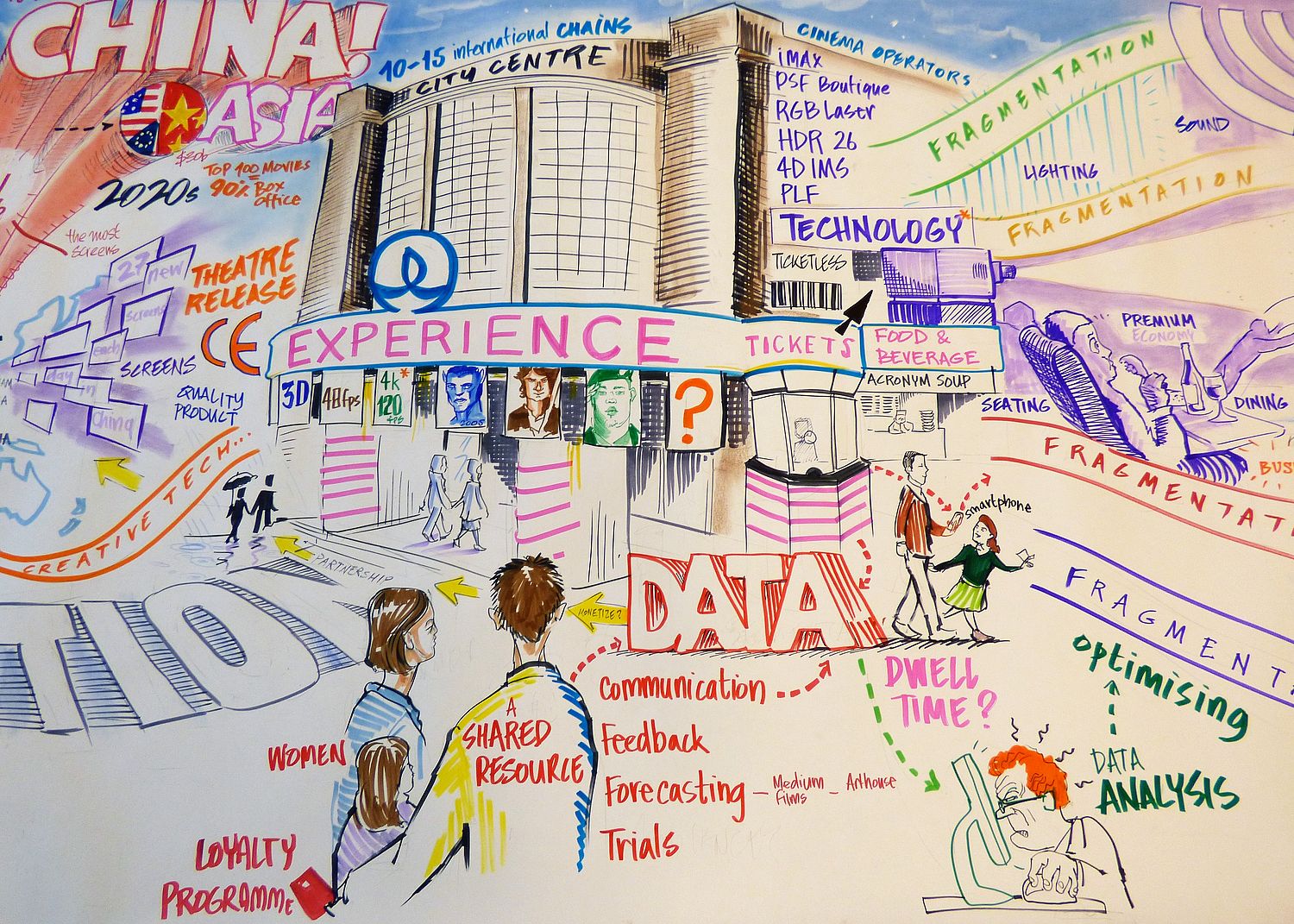

In Brussels on the 15th and 16th of October the UNIC Cinema Days will attract the exhibition industry with a non-tradefair set of discussions. There is a lot of subtlety in the reasons for last year’s growth in attendance (2.5%) and revenues (1.7%). Behind the discussions – last year given with the assistance of 150 industry members – are the ingredients for maintaining this level of growth when the US performed dismally and the Chinese market showed cracks (fraudulent reporting of sales amid a number of other growing pains).
